About seven years ago, a Fortune 100 corporation began a three-year, $2 million organizational experiment. The goal was to gain a sustainable competitive advantage by learning faster than its competitors. The company planned to accomplish this by means of a “secret weapon” — systems thinking — that would enable management teams throughout the organization to achieve measurable improvement in organizational performance. It seemed plausible to the core project team that the organization could measure its learning in dollars and its improvement in return on capital employed.

But when they assessed the results of the effort several years later, they had to admit that, although there were many successes at the project level, the program did not achieve the organization-wide impact they originally intended. The problems seemed to center on implementation. While much effort had been put into selecting issues that were critical to the organization, and into designing robust models around those issues, scant attention had been paid to transferring the learning to a larger context. As a result, the insights had little impact beyond the original project teams.

Determining Success or Failure

To be successful, implementation cannot begin after a project is completed. It must start before the initiation of the project — as the team discusses its expectations, anticipated outcomes, and measures for success — and be integrated into every step in the process. If a team is intentional about its project design up front, and then compares its results with the intended outcomes, even project “failures” can be turned into learning opportunities that will improve the quality of thinking, communicating, and decision-making across the organization. So, for example, the implementation pitfalls experienced by the Fortune 100 company could have ultimately benefited the organization if each project had been evaluated in the context of a theory about what the expected results would be.

While every team must craft its own learning processes, there are some general guidelines that can help a team more effectively bring systems thinking out of the workshop and into the workplace. To make this transition, two stages in the implementation process are critical: designing the intervention (front-end work), and evaluating the outcomes (back-end work).

Front-End Work: Designing the Intervention

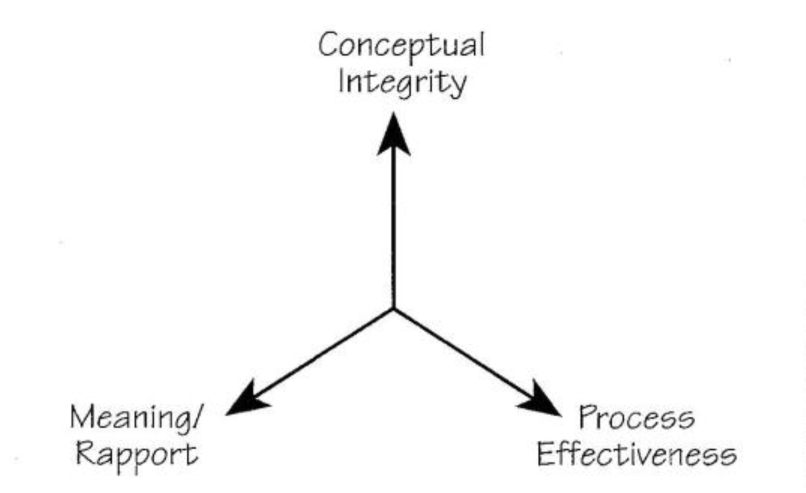

A starting point for designing a modeling intervention is to consider the purpose and use of the model. What type of model do you want to create? What sort of impact do you want it to have in the organization? How do you want to structure the model-building process? These three criteria are captured in the “Design and Evaluation Framework,” which provides a schema for structuring a modeling process so that it is most effective for the particular audience, issue, and needs of the group (see “Design and Evaluation Framework”).

To understand how these criteria can help frame the modeling project and implementation efforts, let’s look at each axis individually:

Conceptual Integrity. The type of model you want to build will have a tremendous impact on the overall project design. For example, expert models are characterized by a high degree of detail complexity and are geared toward accurate representations of system interactions (high on conceptual integrity). These models are built by modeling experts for expert recommendation. Models for learning, on the other hand, tend to be more aggregate, have less detail complexity, and represent situations at a level closer to the one at which people think about them. Thus they are lower on the conceptual integrity axis.

Process Effectiveness. The Process Effectiveness axis measures issues such as the ease of use of the model, and the suitability of the process to the actual situation and time constraints of the team. Do you want a highly interactive model that will be used as part of a learning laboratory throughout the company (high on process effectiveness)? Will it be used by technical experts in the company, who are less interested in ease of use than in the availability of underlying data or assumptions (low on process effectiveness)? Or will it be part of an Executive Information System, which will be used in a very specific way by top managers in the company to assist in decision-making and scenario testing (medium-high)?

Meaning/Rapport. The Meaning/Rapport axis measures the team's involvement in the modeling process itself. This is where you want to think about how the model will be built, and what methods will be used to disseminate the insights gained from the modeling project. Do you want to build an expert model, and have recommendations made to the team who will then be charged with implementing them (low on rapport)? Do you want the modeling project to be highly interactive, involving people throughout the organization (high on rapport)? Or will it be best to confine the modeling effort to a core team, and then create a series of learning laboratories to share the insights with others in the organization (medium-high)?

Using the Framework

The Design and Evaluation Framework should be used in the planning stages of a project to make intentional decisions about how a team will design and implement the model. When used in this way, it can help structure the process and indicate where resources should be placed for maximum effectiveness. For example, if a team decides to hire someone to build an expert model, team buy-in would not be necessary (low Meaning/Rapport), and it would not be important for the team to understand the model-building process (very low Process Effectiveness). However, Conceptual Integrity would have to be at the highest level, meaning the model would need to be thoroughly tested and calibrated against actual data.

In a modeling-for-learning intervention, the most important initial criterion would be Meaning/Rapport (medium to high). Without meeting the team where they are by using their language and addressing their core issues, the project could come to a dead stop. This model must also have enough Conceptual Integrity (low to medium) to be credible and useful. But the validity of the model will be less important than Process Effectiveness (medium), since most of the team learning will occur during the model development process. In this situation, creating a greater shared meaning and a common language for the team may be a more important objective than getting the “right” answers.

To ensure that an intervention is successful on all dimensions, we should begin by identifying which axis is most critical for success. Then we can look at how to leverage that advantage into success on the other axes. By considering how all three criteria will play out in a given intervention, we can be in a better position to determine which tools will be most effective in designing a successful project. For example, a project that is high on Conceptual Integrity will, in most cases, involve a model or robust causal loop diagram. If we feel, however, that Meaning/Rapport is also important to the project, team-building activities and perhaps some use of dialogue should probably be used to complement the “harder” tools of systems thinking.

We can visualize the sequence of tools as an ever-expanding spiral across all three axes. A spiral implementation strategy will help the team choose a sequence of tools or processes (e.g., hexagons, dialogue, scenario planning, simulation modeling, etc.) that will maximize the effectiveness at each step. Combining the tools in a sequence where each tool is used for its strongest benefit will create a more meaningful total intervention for the team. The larger the “toolbox” the team is drawing from, the greater the chance of implementation success.

However, not all implementation strategies should address all three axes equally. In fact, there may be some natural conflicts between the framework axes. For example, if the project involves designing a model that is high on Conceptual Integrity, such as a complex mathematical model, it may not be possible to make the model building process visible (Process Effectiveness).

Back-End Work: Evaluating the Intervention

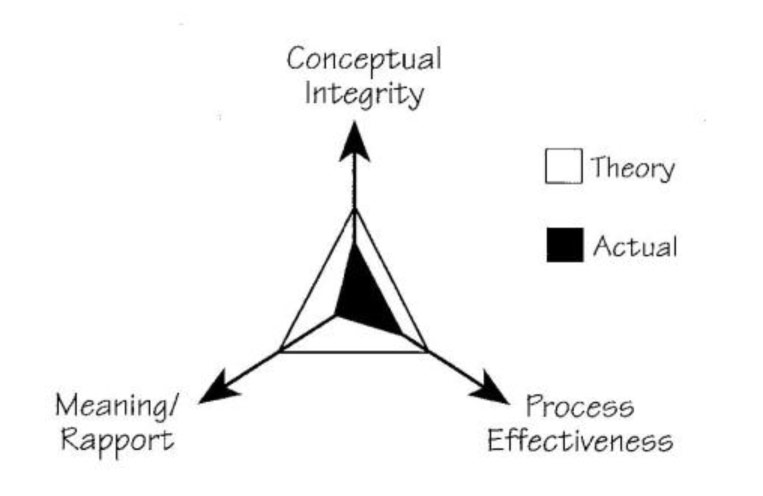

At the conclusion of the intervention, the Design and Evaluation Framework can be used to measure the impact and effectiveness, and also to identify next steps. In this process, the team revisits the framework by comparing the design intent (theory) to the actual results (see “Theory versus Actual”). The questions that should be addressed at this point include: Did the actual outcome of the project match the intent? Why or why not? What caused the actual results to differ from the expected? Were there any serious shortcomings? If so, how could we have prevented them? Were there any pleasant surprises? If so, how could we build them into our designs in the future? (See “Implementation Failures” for a description of three common pitfalls.)

For example, a medium-sized specialty goods manufacturer was troubled by a persistent problem in inventory management that was beginning to impact its ability to compete. The president of the company commissioned a team to look into the problem. After some initial conversation, the design team decided that, because the issue had widespread implications throughout the company, they needed to create a model that could be used easily by mid- and senior-level managers to explore the dynamics of inventory management. They knew they had a limited budget for the project, so they used the Design and Evaluation Framework to determine where their attention should be focused.

The team felt that it was very important for the model to capture the basic dynamics of inventory movement, since it was a critical issue for the company (high on Conceptual Integrity). Since the model would be used by groups of people throughout the company, they also felt that it would need to be relatively easy to use (medium to high on Process Effectiveness). But to save time and money, they decided that only a few key people would need to be involved in the actual design of the model (low on Meaning/Rapport).

Over the next few weeks, the model was developed, and the modeling team’s recommendations were shared with groups of managers throughout the organization via a series of workshops. Much to the disappointment of the core group, most of the time in the workshops was spent discrediting the model and pointing fingers at who was “really responsible for our inventory problems.”

Design and Evaluation Framework

To rank high on the Conceptual Integrity axis, the model must:

- Capture what is happening in reality

- Be self-consistent

- Be validated and calibrated

- Support double-loop learning

To rank high on the Meaning/Rapport axis, the model must:

- Engage the enthusiasm of the group

- Improve group understanding

- Create ownership of results and buy-in for implementation

- Support a change in the mental models of the group

To rank high on the Process Effectiveness axis, the model must:

- Make the process explicit and visible

- Create shared team meaning

- Use team- or industry-specific vocabulary

- Define parameters based on available resources (time, money, size of team, space, etc.)

When the team gathered to evaluate the project, they realized that their failure to gain buy-in resulted from the initial decision not to involve more people in the modeling process. By focusing their efforts on creating a “valid” model of the issues, they had not done what was really needed—to provide an opportunity for the company to examine the different mental models around what levels and kinds of inventory were critical to the success of the operation, and how they could structure their inventory system most effectively. Because the model did not represent shared meaning, there was little buy-in across the company.

In the final analysis, the team saw that the company would have been better served if they had sacrificed some of the Conceptual Integrity of the initial model to emphasize development of shared meaning (medium to high on Meaning/Rapport). With this knowledge, the team started over again. This time, they brought the management teams together up front to explore the key issues and provide input to the modeling process. These conversations became the basis of a simple model that was then turned into a learning laboratory. The management teams were brought back together to explore the model and test alternate approaches, resulting in a shared strategy for solving their inventory problems.

Theory versus Actual

The Design and Evaluation Framework can be used to measure the impact and effectiveness of a project by comparing the design intent (theory) to the actual results.
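One way to make this comparison concrete is to score each axis at design time and again at evaluation time, then look at the gaps. The sketch below is only an illustration: the numeric scale (1 = low, 2 = medium, 3 = high), the `Profile` class, the function names, and the sample scores are all assumptions introduced here, not part of the framework itself.

```python
from dataclasses import dataclass

# The three axes of the Design and Evaluation Framework.
AXES = ("conceptual_integrity", "process_effectiveness", "meaning_rapport")


@dataclass(frozen=True)
class Profile:
    """Scores on an assumed scale: 1 = low, 2 = medium, 3 = high."""
    conceptual_integrity: float
    process_effectiveness: float
    meaning_rapport: float


def gaps(theory, actual):
    """Return actual minus intended score for each axis."""
    return {axis: getattr(actual, axis) - getattr(theory, axis) for axis in AXES}


def review_order(theory, actual):
    """List the axes from largest to smallest absolute gap."""
    g = gaps(theory, actual)
    return sorted(AXES, key=lambda axis: abs(g[axis]), reverse=True)


# Hypothetical scores, chosen only to show the mechanics.
theory = Profile(conceptual_integrity=3.0, process_effectiveness=2.0, meaning_rapport=2.0)
actual = Profile(conceptual_integrity=3.0, process_effectiveness=1.5, meaning_rapport=1.0)

for axis in review_order(theory, actual):
    print(f"{axis}: gap {gaps(theory, actual)[axis]:+.1f}")
```

The numbers themselves matter less than the conversation they prompt: the axis with the largest gap is a natural place to start asking why the actual results differed from the intent.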

Recommendations for Implementation Success

Creating an explicit design theory helps frame an intervention and will help leverage the learning from the project. But in addition to having an overall framework, there are tangible actions that a project team can also take to ensure implementation success:

1. Link Learning and Implementation. Learning and implementation are inseparable. While you are thinking about what to do next, you are actually in the implementation phase — in other words, thinking is one step in implementation. And as you implement, you receive feedback from the system in the form of consequences from your actions, which provide a valuable opportunity to learn about the system.

“Those who do not learn from history are doomed to repeat it” applies to organizations as well as people. Too many companies don’t take the opportunity to reflect on implementation, and as a result relive history again and again—whether in cycles of boom and bust, growth and decline, or overproduction and stockouts. Experience is inevitable; learning is not. If an organization is to profit fully from its implementation efforts, it must “mine” its mistakes for all the insight they can provide.

Recommendation: Remember that learning takes place while you are designing interventions and working with specific tools, not just during the implementation phase. Maintain the flexibility to move back and forth between tools, often intentionally reiterating steps. Capture your learning as you go along. Analysis, implementation, and learning must all happen together.

2. Set Appropriate Project Boundaries. The temptation in any modeling project is continually to expand the scope of the project to capture the “whole” system. But because the absolute structure of any system is infinitely complex, the project can quickly bog down. For this reason, setting the correct boundary is a critical part of the project design. The fact that you can never see the whole system only makes it more important to be intentional about what you include in your field of view.

Recommendation: Clarify boundaries at the outset of the intervention. Be sure to include all meaningful information and perspectives. As with goal setting, make sure that the boundaries are specific, measurable, and attainable within the context of available resources and time.

3. Develop High-Performance Teams. As organizations move from closed systems, which are dependent on the direction of a single “leader,” to open systems, where leadership is shared among many individuals and functional areas, we need to make sure that teams can function effectively. One thing that makes it easier for individuals rather than teams to reach peak performance is that individuals know how they will react in stressful situations, and can adjust accordingly. Teams often implode under pressure, breaking down into hostile confrontation, dysfunctional aggressiveness, indecisiveness, and working (knowingly or unknowingly) at cross-purposes.

Teams can learn how to work together more effectively under pressure by practicing team learning skills such as surfacing mental models and suspending assumptions. In addition, practice can help a group learn how to surface productively the conflicts that might otherwise erupt under pressure, and help ensure that the group does not degenerate because of problems in their internal processes.

Recommendation: To build high-performance teams, create opportunities for teams to celebrate their successes and risk failure in a safe setting. It is important to develop within the team the cognitive capacity that exists in the brightest, most creative, and functional individuals.

4. Design an Appropriate Practice Field. If you have an absolutely clear idea of what’s going on, if there is no danger of error, if the outcomes are pretty clear, if you have done this before…don’t just stand there, do something!

But if you recognize possible signs of poor decision-making, if you sense a quick intuitive-level diagnosis, if you are not content with the risk of repeating past behavior…don’t just do something, stand there! This is the time when a practice field can be most useful.

Often we move too quickly from design to implementation. But it can be difficult to evaluate the outcomes of implementation, because the delay between cause and effect is often so lengthy that the impact is obscured. Practice fields such as learning laboratories enable managers to learn and make mistakes in a “safe” environment, and then transfer that learning into the real system. They provide an opportunity for teams to make their five to ten most important strategic blunders in the safety of a practice field.

Recommendation: Create a safe practice environment in which members can learn, build experience, and experiment with new strategies. Remember that the most effective practice fields are virtually risk-free and allow you to gain the insights of many years of experience in a short period of time.

5. Reach Completion. This final step is perhaps the most important and yet most neglected aspect of implementation. It is important to close the learning cycle by evaluating the intervention: Where did we succeed? Where did we fall short of expectations? What can we learn from the process?

Bringing completion to a project sometimes means simply making a list of all the things that were not completed. This brings us back to recommendation #1: Link Learning and Implementation. By analyzing its experience, a team can once again link learning with implementation, and prepare for the next cycle of learning and implementation. Closing this circle makes it possible to create a “virtuous” cycle of continual improvement, instead of repeating past mistakes.

Recommendation: Make sure you complete all cycles of learning, all cycles of action, and all cycles of communication you initiate within the group.

Final Thoughts

Nothing can discredit a good idea or destroy the chance for significant change as much as poor implementation. The very best ideas or approaches can backfire if careful thought is not given to how they should be introduced for maximum effectiveness. Even worse, a poorly executed implementation plan can create organization-wide resistance to the particular methodology that was used. But by following an explicit design and evaluation process, you can better ensure that all projects — whether successful or not — contribute to an organization’s overall learning objectives.

David P. Kreutzer is president of GKA Incorporated. Virginia Wiley is an associate and training director at GKA.

Editorial support for this article was provided by Colleen P. Lannon. The concept of using a “toolbox” metaphor to structure an implementation process has been drawn from the work of Tony Hodgson and Gary and Nella Chicoyne-Piper.

Implementation Failures

- Failure of the first kind involves overemphasizing conceptual integrity. This results in a valid model that has little buy-in and virtually no organizational learning or implementation.

- Failure of the second kind overemphasizes rapport. Projects that err in this direction risk producing lots of “fluff,” but no real substance.

- Failure of the third kind happens when an organization spends all of its resources on process and ease of use, but does not achieve buy-in or conceptual integrity.

The key here is not to overemphasize any one of the axes (Conceptual Integrity, Process Effectiveness, or Meaning/Rapport) to the exclusion of the other two. By considering the impact of focusing too narrowly on any one of the three areas, you can anticipate potential problems and create “course corrections” if a project starts to falter.
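As a rough illustration of how a team might watch for these pitfalls, the sketch below flags a project whose score on one axis exceeds both of the others by a wide margin. The 1-to-3 scale, the `margin` threshold, the function name, and the sample scores are assumptions made for this example; the article does not prescribe numeric cutoffs.

```python
# Map each axis to the failure it risks when it is overemphasized.
FAILURE_KINDS = {
    "conceptual_integrity": "first kind: valid model, little buy-in or learning",
    "meaning_rapport": "second kind: lots of fluff, no real substance",
    "process_effectiveness": "third kind: process without buy-in or integrity",
}


def overemphasis_warning(scores, margin=1.5):
    """Return the failure kind at risk, or None if no axis dominates.

    An axis "dominates" when its score exceeds each of the other two
    by at least `margin` (an assumed, adjustable threshold).
    """
    for axis, label in FAILURE_KINDS.items():
        others = [value for name, value in scores.items() if name != axis]
        if all(scores[axis] - value >= margin for value in others):
            return label
    return None


# Hypothetical profiles on an assumed scale of 1 (low) to 3 (high).
lopsided = {"conceptual_integrity": 3, "process_effectiveness": 1, "meaning_rapport": 1}
balanced = {"conceptual_integrity": 2, "process_effectiveness": 2, "meaning_rapport": 3}

print(overemphasis_warning(lopsided))
print(overemphasis_warning(balanced))
```

A check like this is no substitute for the team's own judgment; it simply gives an early prompt to ask whether two of the three axes are being neglected.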