One of the biggest contributions that systems thinking can make is to help managers build theories about why things happen the way they do. Testing those theories, however, requires tools such as computer simulation models, which let you see how different assumptions play out over time. But because building a model can be expensive and time-consuming, it cannot realistically be applied to every issue. So how can you determine when simulation is appropriate?

When to Simulate

In general, simulation modeling is useful for understanding complex relationships, for developing and testing specific policies, and for understanding the implications of long time delays on a problem or issue (see “When to Simulate,” April 1995). While these general guidelines give you a sense of why modeling can be useful, they don’t tell you when a particular problem you’re working on can benefit from simulation modeling.

That is why experienced practitioners watch for certain signals that indicate when it is time to move into simulation modeling. One such entry point is to listen for organizational “dilemmas.”

A “dilemma” occurs when a group is aware of multiple consequences of a policy or strategy, but there is no clear agreement around which consequence is strongest at what point in time. In such a situation—where there are strong passions around a specific issue and the organization or team is “stuck” at its current level of understanding—simulation modeling has the potential to be very effective. The following six-step process describes how modeling can be used to resolve such dilemmas.

1. Identify the Dilemma

The first step is to listen for situations where there are two different theories about the consequences of a decision (for more on this process, see “Using Systems Thinking ‘On-Line’: Listening for Multiple Hypotheses,” August 1995). Dilemmas are often characterized by one party strongly advocating for a decision or strategy, followed by public disagreement or private mumbling about how that action will cause just the opposite of the intended outcome.

For example, the plant management team of a major component supplier wanted to “load the plant”—that is, continually push sales in order to maximize the plant’s capacity usage. But others expressed concern that loading the plant might create other problems that would affect both quality and service.

2. Map the Theories

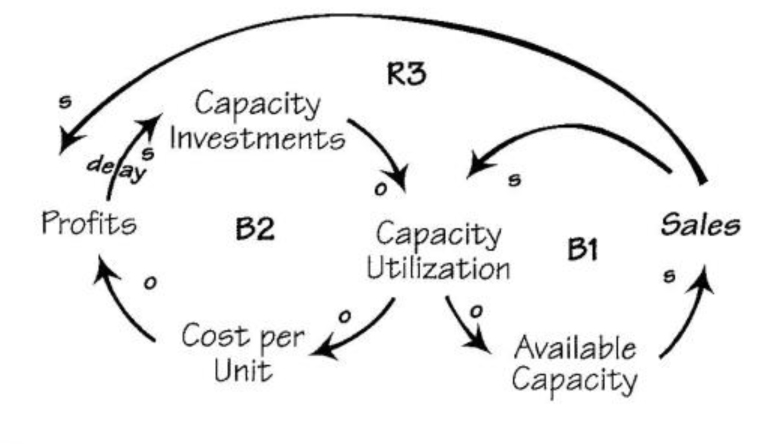

Once you have identified the dilemma, clarify the issues involved by explicitly mapping out each viewpoint using causal loop diagrams or systems archetypes. In the plant capacity example, the strategy behind loading the plant was to keep increasing sales in order to maximize the plant's capacity utilization, until available capacity falls to zero and no more orders can be filled (B1 in “Theory 1: Loading the Plant”). The benefit of this strategy is twofold: increasing sales boosts profits (R3), and increasing capacity utilization lowers the cost per unit, which raises profits and allows more investment in plant capacity (B2).
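The cost-per-unit link behind B2 is simple arithmetic: fixed plant costs are spread over however many units the plant actually produces. The sketch below assumes a hypothetical plant with a capacity of 100 units per week, $5,000 of weekly fixed cost, and $20 of variable cost per unit; none of these figures come from the case.

```python
def unit_cost(utilization, capacity=100.0, fixed_cost=5000.0, variable_cost=20.0):
    """Cost per unit when (hypothetical) fixed plant costs are spread over actual output."""
    units = utilization * capacity
    if units <= 0:
        raise ValueError("utilization must be positive")
    return fixed_cost / units + variable_cost


# Higher utilization spreads the same fixed cost over more units.
for u in (0.70, 0.82):
    print(f"{u:.0%}: {unit_cost(u):.2f} per unit")
```

This is the half of the argument that Theory 1 emphasizes. It says nothing about the flexibility and reputation effects raised in Theory 2.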

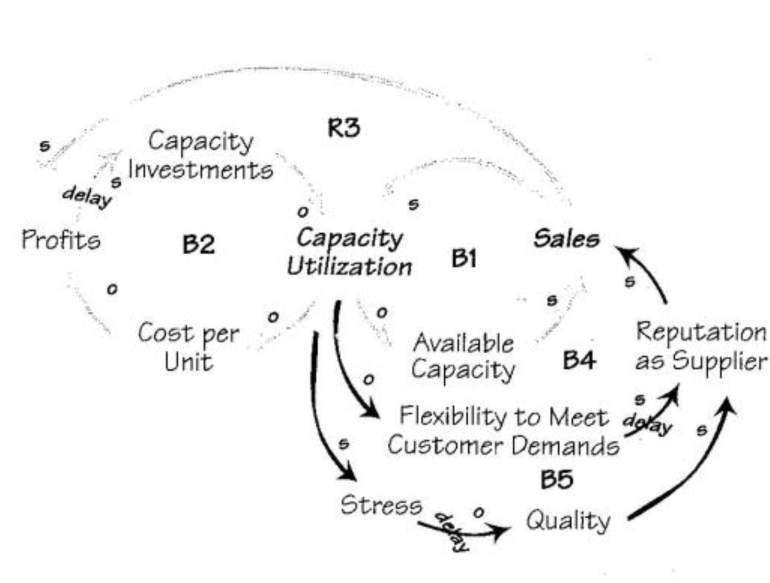

However, other people felt strongly that if plant utilization remained too high, the ability to respond to customer changes (flexibility) would go down, eventually hurting the company’s reputation as a supplier and leading to a decline in sales (B4 in “Theory 2: Unintended Side-Effects”). They also believed that loading the plant would cause the stress level in the organization to rise, eventually eroding quality and further hurting their reputation (B5).

3. Assess Dynamic Complexity

Some situations of “multiple hypotheses” resolve themselves when the different parties work together to map out each story and find that one is clearly more accurate. But in cases where both sets of interconnections are plausible and the uncertainty or disagreement still exists, further work is needed to resolve the dilemma. If the uncertainty is simply around a number (such as accurate cost data) or the probability of one outcome versus another, it is a static problem and techniques such as decision analysis may be appropriate. Simulation modeling, on the other hand, is most effective where there is some degree of dynamic complexity—where the link between cause and effect is subtle and the implications over time are not obvious.

To check for dynamic complexity, ask whether the uncertainty or disagreement centers on which feedback loop dominates at which time; in other words, whether the long-term impact might differ from the short-term effects. If so, some degree of dynamic complexity exists, and it is appropriate to move into simulation modeling. In the manufacturing example, a key area of uncertainty was how different capacity utilization strategies would affect the company's reputation (and therefore sales) over time. The importance of this time delay indicated a degree of dynamic complexity that could benefit from a simulation approach.
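A time delay like the one between flexibility and reputation is often represented as a first-order delay: a stock that closes a fraction of the gap to its target each period. This minimal sketch assumes reputation adjusts toward current flexibility over a hypothetical 8-week delay time and uses simple Euler integration; the values are invented for illustration.

```python
def first_order_delay(target, initial, delay_time, steps, dt=1.0):
    """Euler-integrate a stock that moves toward `target` over `delay_time`."""
    level = initial
    path = [level]
    for _ in range(steps):
        # Each step closes dt/delay_time of the remaining gap.
        level += dt * (target - level) / delay_time
        path.append(level)
    return path


# Hypothetical: flexibility drops from 1.0 to 0.5; reputation follows slowly.
reputation = first_order_delay(target=0.5, initial=1.0, delay_time=8.0, steps=40)
print(round(reputation[1], 4), round(reputation[-1], 4))
```

One week after flexibility falls, reputation has barely moved; only after many weeks does it approach the lower level. That gap between the short-term and long-term response is the signature of dynamic complexity described above.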

4. Develop the Simulation Model

At this point, you are ready to develop the model. First, focus the model-building effort by asking, “What do we need to learn in order to resolve the dilemma?” Stating the objective up front will guide the rest of the process.

Once you have established your objective, you can define the boundaries of the simulation model by identifying the key decisions (critical policies that the organization makes), the important indicators (what you need to see from the system to assess the decision), and the uncertainties (most fragile assumptions about the relationships or outside world) associated with the dilemma.

In the capacity utilization example, the parties recognized that the dilemma would be resolved once they knew both the short- and long-term impact of different capacity utilization levels on sales and profits. Their primary decision was selecting a utilization goal (desired production relative to capacity), which could be assessed by looking at the long-term behavior of sales and reputation. The company's key uncertainties were demand and customer sensitivity: the team didn't know exactly how the market would evolve, and the impact of different utilization levels depended heavily on their assumptions about customers' sensitivity to price, quality, and flexibility.
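Writing the objective and boundary down as a small record can keep a modeling team honest about what is in scope. The sketch below is one possible structure, assuming a hypothetical `ModelBoundary` type; its fields mirror the three categories above (decisions, indicators, uncertainties), populated with the plant capacity example.

```python
from dataclasses import dataclass, field


@dataclass
class ModelBoundary:
    """Hypothetical record of a model's focus and boundary (step 4)."""
    objective: str
    decisions: list[str] = field(default_factory=list)      # critical policies
    indicators: list[str] = field(default_factory=list)     # what we watch
    uncertainties: list[str] = field(default_factory=list)  # fragile assumptions

    def is_focused(self) -> bool:
        """A model is ready to build once it has an objective, a decision, and an indicator."""
        return bool(self.objective and self.decisions and self.indicators)


plant = ModelBoundary(
    objective="Short- and long-term impact of utilization on sales and profits",
    decisions=["utilization goal (desired production relative to capacity)"],
    indicators=["sales over time", "reputation over time"],
    uncertainties=["market demand", "customer sensitivity to price, quality, flexibility"],
)
print(plant.is_focused())
```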

Once you have established the focus and boundaries of the model, you are ready to build the simulation model by defining the relationships between important variables, such as the relationship between manufacturing flexibility and reputation in our plant capacity example. (For more on the actual mechanics of model building, see “From Causal Loop Diagrams to Computer Models—Part II,” August 1994).
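In system dynamics models, a relationship such as flexibility's effect on reputation is often entered as a table (lookup) function: a handful of points the team agrees on, with linear interpolation between them. The points below are hypothetical placeholders, not values from the case.

```python
from bisect import bisect_right

# Hypothetical table: manufacturing flexibility (0..1) -> effect on reputation.
FLEXIBILITY = [0.0, 0.25, 0.5, 0.75, 1.0]
REPUTATION_EFFECT = [0.2, 0.5, 0.8, 0.95, 1.0]


def lookup(x, xs, ys):
    """Piecewise-linear table function, held flat outside the table's range."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)  # first index with xs[i] > x
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


print(lookup(0.375, FLEXIBILITY, REPUTATION_EFFECT))
```

A table keeps the assumption visible and easy to argue about, which suits a dilemma where the disagreement is precisely about how strong such a link is.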

5. Divergence: Testing the Assumptions

Once you have built the model, you can begin testing the different assumptions behind your causal theories to see the effect of those interrelationships over time. At this stage, you are attempting to be divergent—trying out many possible scenarios, any of which can lead to new questions and experiments.

Theory 1: Loading the Plant

Theory 2: Unintended Side-Effects

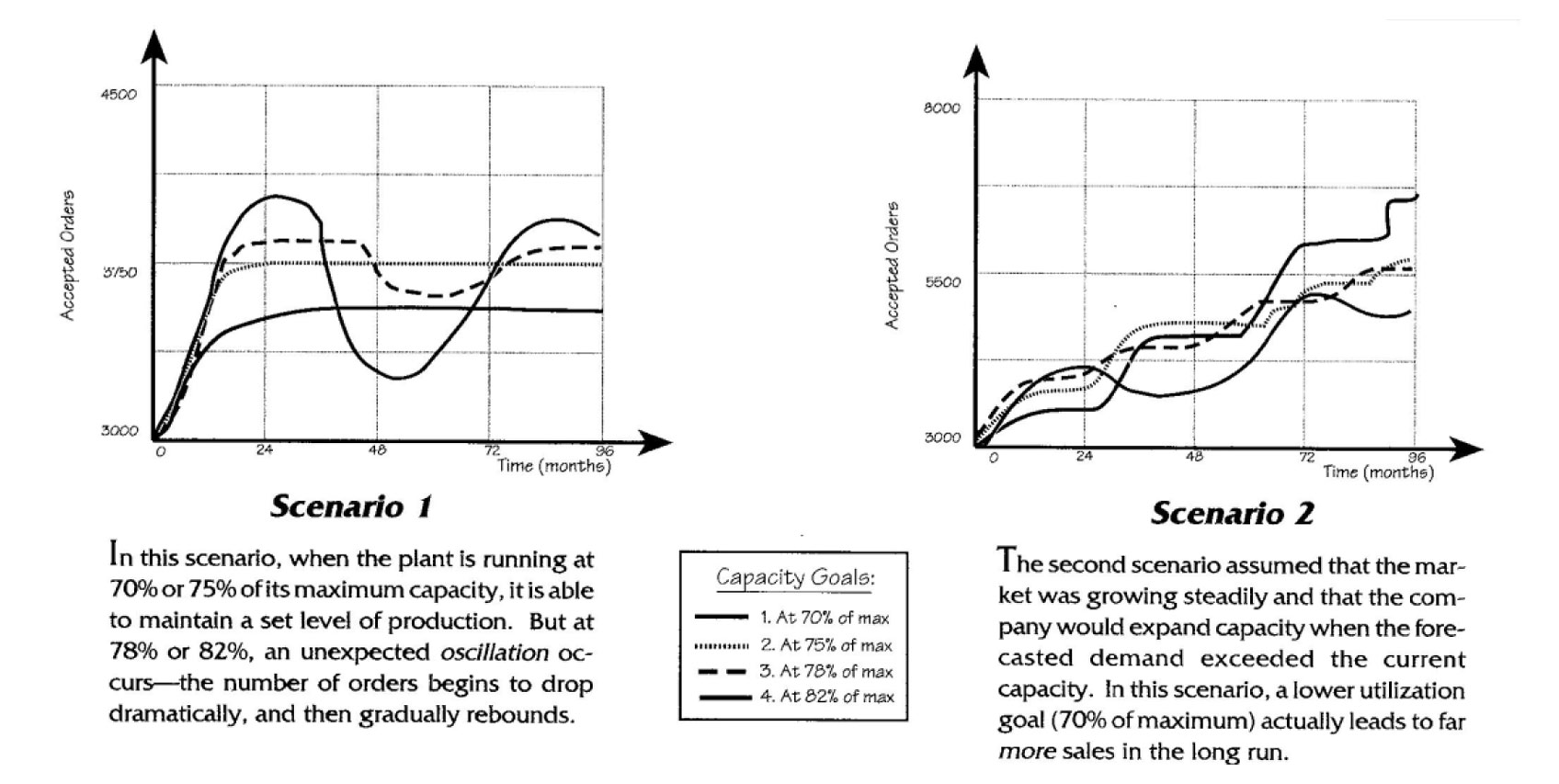

Using the simulator they had developed, the management team was able to test different capacity utilization levels, using various assumptions about the market and customer “sensitivity.” In the first scenario, the group made two simplifying assumptions: that there would be no change in overall market size, and that the organization could not build new capacity. They then ran the simulator, testing four different capacity utilization “goals”: 70%, 75%, 78%, and 82% of maximum possible capacity.

When they ran the simulation, they discovered that at 70% or 75% of maximum capacity, the plant is able to maintain a set level of production. But at 78% or 82%, an unexpected oscillation appeared: after a short period of time, the number of orders began to drop dramatically and then gradually rebounded (see “Scenario 1” in “Capacity Utilization Scenarios”).

From these results, the group hypothesized that at higher capacity utilizations the plant is less flexible in meeting customer demands, which damages the company's reputation and leads to a decline in sales. Once the number of orders falls below the utilization goal, the plant has time to improve flexibility and quality. Over time, its reputation and sales gradually rebound until it is once again running at high capacity utilization with low flexibility, and the cycle begins again.
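The hypothesized loop can be sketched as a tiny simulation of Scenario 1's assumptions (fixed market, no new capacity) swept over the same four goals. This is not the team's model: the market size, the utilization-to-flexibility table, and the two delay times are invented, and whether this version oscillates or simply settles depends on those guesses. Flexibility passes through two delays in series (customer perception, then reputation), and demand follows reputation.

```python
from bisect import bisect_right


def interp(x, xs, ys):
    """Piecewise-linear table lookup, clamped at both ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    i = bisect_right(xs, x)
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Hypothetical table: flexibility falls as utilization rises past 72%.
UTIL = [0.72, 0.78, 0.84, 0.90]
FLEX = [1.00, 0.80, 0.50, 0.30]


def simulate(goal, capacity=100.0, market=100.0, weeks=104, dt=1.0,
             perception_time=4.0, reputation_time=8.0):
    """Scenario 1 sketch: weekly orders accepted under one utilization goal."""
    perceived_flex = 1.0  # customers' perception of flexibility (stock)
    reputation = 1.0      # supplier reputation, 0..1 (stock)
    orders = []
    for _ in range(weeks):
        demand = market * reputation             # demand tracks reputation
        accepted = min(demand, goal * capacity)  # sales load the plant up to the goal
        flexibility = interp(accepted / capacity, UTIL, FLEX)
        # Two first-order delays in series: flexibility -> perception -> reputation.
        perceived_flex += dt * (flexibility - perceived_flex) / perception_time
        reputation += dt * (perceived_flex - reputation) / reputation_time
        orders.append(accepted)
    return orders


for goal in (0.70, 0.75, 0.78, 0.82):
    run = simulate(goal)
    print(f"goal={goal:.0%}  min={min(run):.1f}  final={run[-1]:.1f}")
```

Because the invented table leaves flexibility untouched below 72% utilization, the 70% run stays flat by construction; the interesting part is how the higher goals behave once reputation starts to slip.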

In the second scenario, the group tested the same four capacity utilization goals, but with new assumptions: that the market would grow steadily, and that the company would expand capacity whenever forecasted demand exceeded current capacity (see “Scenario 2” in “Capacity Utilization Scenarios”). To their surprise, this simulation showed that a lower utilization goal actually leads to far more sales in the long run. From this they hypothesized that at a lower utilization there is greater unfilled demand, which leads to more optimistic forecasting and investment, more plant capacity, and a better reputation as a growing, reliable supplier.
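Scenario 2 adds a capacity-expansion rule. One simple way to encode it, assuming (our assumption, not the team's documented rule) that capacity comes in fixed increments and that expansion is triggered when forecast demand exceeds the usable capacity implied by the utilization goal, is sketched below with a hypothetical `capacity_to_add` function.

```python
import math


def capacity_to_add(forecast, capacity, goal, increment):
    """Capacity to order so that goal * (capacity + added) covers the forecast.

    Hypothetical rule: expansion comes in whole increments and is triggered
    when forecast demand exceeds usable capacity (goal * capacity).
    """
    if forecast <= goal * capacity:
        return 0
    needed = forecast / goal - capacity
    return math.ceil(needed / increment) * increment


# Same forecast and plant, two different utilization goals.
print(capacity_to_add(90, 100, 0.70, 10), capacity_to_add(90, 100, 0.82, 10))  # prints: 30 10
```

Under this rule, a lower goal orders more capacity for the same forecast. That is one possible route by which a lower utilization goal could feed investment and growth; the team's own hypothesis runs through unfilled demand and forecasting, which this sketch does not model.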

6. Convergence: Resolving the Dilemma

Testing various scenarios allows you to explore assumptions and gather data. But understanding more about behavior over time is only useful if it helps move toward resolution of the dilemma. Therefore, the divergent phase should be followed by a convergent phase, in which the group closes in on the policies that produce the most desirable short-term and long-term behavior for the most likely future scenarios.
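The convergent step can be made explicit by scoring each candidate policy across scenarios. The sketch below assumes a hypothetical scoring scheme: each run is summarized by one short-term and one long-term metric (for example, average sales in the first and final year), and scenarios are weighted by the group's judgment of their likelihood. The team may well have converged through discussion rather than a formula; this only shows one way to structure that comparison.

```python
def choose_policy(results, scenario_weights, short_term_weight=0.5):
    """Pick the utilization goal with the best likelihood-weighted score.

    results[goal][scenario] = (short_term_metric, long_term_metric)
    scenario_weights[scenario] = subjective likelihood of that scenario
    """
    long_term_weight = 1.0 - short_term_weight

    def score(goal):
        return sum(
            scenario_weights[scenario]
            * (short_term_weight * short_term + long_term_weight * long_term)
            for scenario, (short_term, long_term) in results[goal].items()
        )

    return max(results, key=score)
```

Changing `short_term_weight` shows how sensitive the choice is to how much the group values near-term loading versus long-term reputation, which is often the crux of the original dilemma.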

In the plant capacity example, the team discovered that there was an optimal capacity utilization level, above which the organization created undesired oscillation. The resolution to their dilemma was to set capacity utilization at a level that balanced the need to load the plant with the need to maintain flexibility and a strong company reputation. In this case, the simulation model enabled the team to productively address what had been a long-standing dispute in the plant and to develop a policy acceptable to all of the parties involved.

Don Seville is a research affiliate at the MIT Center for Organizational Learning and an associate with GKA Incorporated.

Many of these ideas emerged from conversations with Jack Homer of Homer Consulting, Inc., who also collaborated on the design of the model.

Editorial support for this article was provided by Colleen P. Lannon.
